Generative AI Apps on Your Phone: A Privacy Wake-Up Call

Imagine you’re chatting with your favorite AI buddy about weekend plans—meanwhile, your phone quietly streams a memoir of your life to a digital brain that never forgets.

Generative AI apps like ChatGPT, Google Gemini, Meta AI, and Perplexity are helpful—but they also require a substantial amount of data. From your calendar events and smart home routines to that sleepy Spotify playlist you queue up at 10:47 p.m. sharp, they might be using more than you realize.

Even when training data is anonymized, fragments of real user interactions can occasionally surface in model output. In rare instances, a model might reproduce content that resembles private user data, especially if that data was part of its training set.

So yes, your data might help generate thoughtful responses for you, but it could also influence answers for thousands of other users.

What Apps Might Be Collecting—With Your Permission

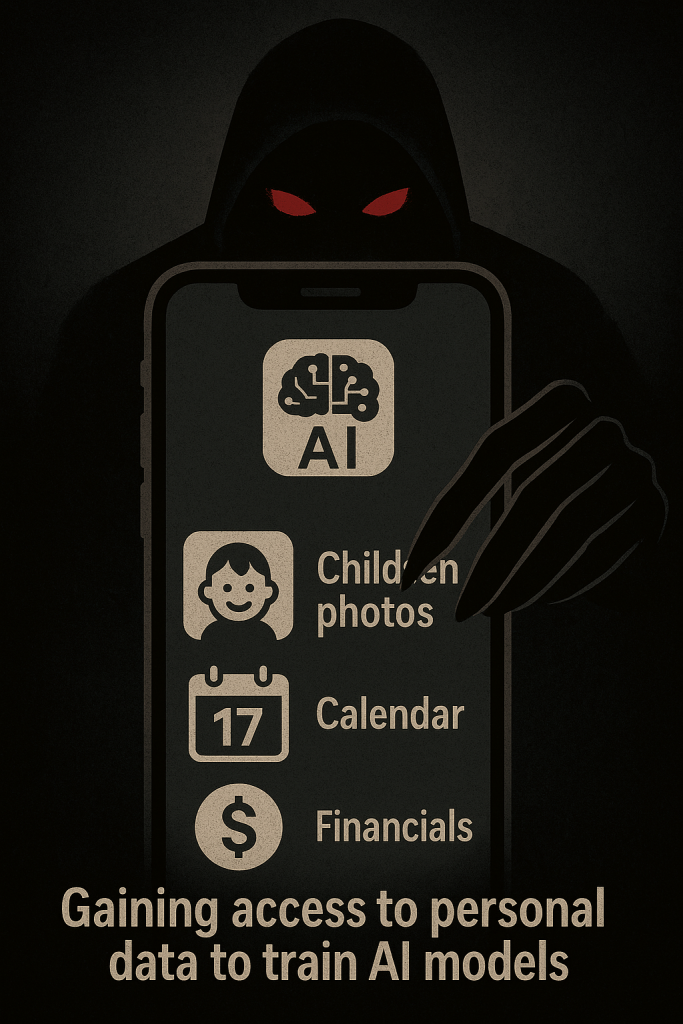

Hidden behind cheerful UIs and helpful prompts is a cascade of data collection. Once installed on your phone, these apps often get access to:

- Your prompts and chats—yes, even the heartfelt one about your children

- Photos—metadata can reveal your location and identity

- Calendar events—great for inferring routines and emotional states

- Financial apps—granted access can expose transaction history and spending habits

- Location data—IP and GPS info used for behavioral profiling

- Device details—browser, operating system, and usage patterns

According to OpenAI’s privacy policy, ChatGPT may use your input (prompts, uploads, and other interactions) to improve its models unless you explicitly opt out. This means that even sensitive information, or details you shared unintentionally, could end up in training data that shapes responses for other users.

Why Mobile Access Is Riskier Than You Think

On phones, access runs deeper and is often ongoing. If you’ve granted an AI app permission to read your calendar, photos, or financial apps, it’s like whispering your secrets into a caffeinated algorithmic ear.

- Your calendar paints a picture of your daily life.

- Your photos might expose habits, locations, and social circles.

- Your financial apps can flag patterns that advertising networks crave.

This data doesn’t just power your AI; it may also be shared with affiliates or vendors for purposes such as service improvement, fraud detection, and targeted advertising. You didn’t sign up for surveillance, but sometimes, that’s what you get.

5 Ways to Protect Your Digital Self

- Limit App Permissions

  - On iOS, go to Settings → Privacy & Security. On Android, go to Settings → Privacy → Permission manager. Exact menu names vary by OS version.

  - Turn off access to your calendar, photos, microphone, and location unless essential.

- Turn Off Training and Chat History

  - In ChatGPT: Settings → Data Controls → disable “Chat History & Training” (the toggle’s label may differ by app version).

  - Use Temporary Chat mode for anything personal.

- Keep Sensitive Info Out of Prompts

  - Avoid typing names, financial details, or the contents of private documents.

  - Assume everything you input might become part of someone else’s answer.

- Use a VPN & Privacy-Focused Browser

  - A VPN masks your IP address and the approximate location derived from it. It does not hide GPS data you have already allowed an app to read.

  - If you use these tools on the web rather than in an app, try Firefox or Brave to reduce tracking.

- Read the Privacy Policy

  - Know what’s being collected, why, and how it’s shared.

  - Stay informed so you can stay in control.
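If you are comfortable with a little scripting, the "Keep Sensitive Info Out of Prompts" tip can be partly automated. The sketch below is a rough illustration, not a complete privacy tool: the `redact` function and its three patterns are hypothetical names and rules made up for this example, and simple regular expressions will miss plenty of identifiers (names, addresses, account numbers in unusual formats) while sometimes masking harmless numbers such as dates.

```python
import re

# Illustrative patterns only (an assumption for this sketch); real-world
# identifiers come in far more formats than these three rules cover.
# CARD is listed before PHONE so long card numbers are not mislabeled.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
    "PHONE": re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d\b"),
}


def redact(text: str) -> str:
    """Replace anything matching a pattern with a placeholder like [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    draft = "Email jane.doe@example.com or call +1 415-555-0132."
    # Review the redacted draft yourself before pasting it into a chat.
    print(redact(draft))
```

Run it on a draft prompt before pasting the result into a chat app. It works as a second pair of eyes, not a guarantee, so a quick manual read-through is still worth the 30 seconds.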

Final Thought: Your phone isn’t just a device—it’s your digital DNA. Generative AI apps might be brilliant sidekicks, but they aren’t harmless. Awareness is your shield. Before sharing your life story in a prompt, take 30 seconds to review your settings.
